Google has announced the beta release of its Cloud Vision API, a set of tools that allow applications to see and recognise the world around them.

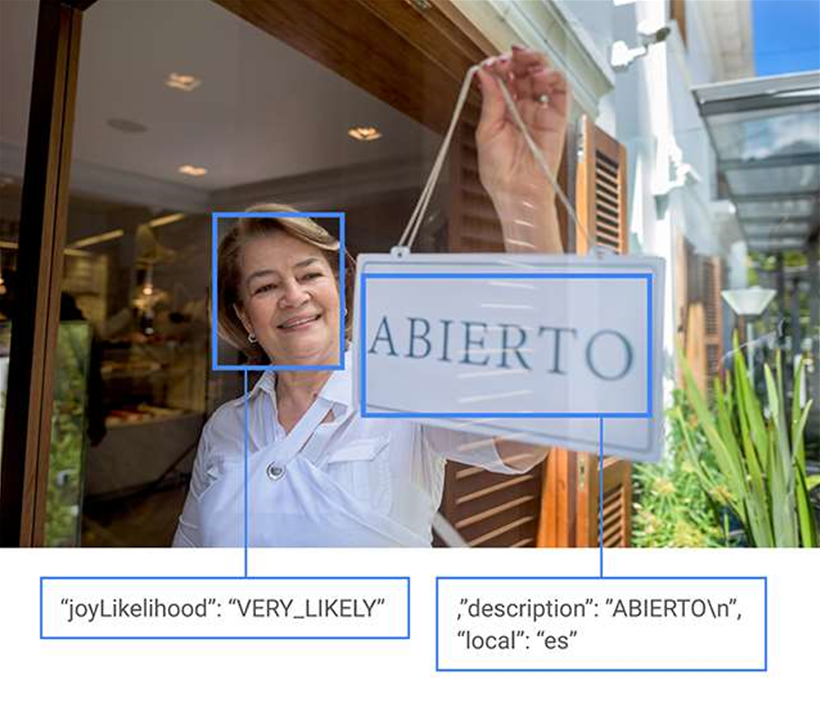

Drawing on technology used in the Google Photos app, the API will be able to recognise objects in images, filter inappropriate content (using Google SafeSearch), detect the emotional expressions of people in images, and perform optical character recognition (OCR) with automatic language identification.
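To make these capabilities concrete, the sketch below builds the JSON body for a single `images:annotate` call that requests one feature per capability listed above. It assumes the request format of the Cloud Vision v1 REST API (`https://vision.googleapis.com/v1/images:annotate`); the beta-era format may have differed. The helper name `build_annotate_request` is hypothetical, and authentication (an API key or OAuth token) and the HTTP call itself are left out.

```python
import base64
import json

# Feature types as named in the Cloud Vision v1 REST API (assumed here),
# each paired with a capability described in the article.
FEATURES = [
    "LABEL_DETECTION",        # recognise objects in the image
    "SAFE_SEARCH_DETECTION",  # flag inappropriate content
    "FACE_DETECTION",         # faces plus emotion likelihoods (joy, sorrow, ...)
    "TEXT_DETECTION",         # OCR; the response reports the detected language
]


def build_annotate_request(image_bytes: bytes, max_results: int = 5) -> dict:
    """Hypothetical helper: build a JSON body for POST .../v1/images:annotate."""
    # Inline image content must be base64-encoded text.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "requests": [
            {
                "image": {"content": encoded},
                "features": [
                    {"type": feature, "maxResults": max_results}
                    for feature in FEATURES
                ],
            }
        ]
    }


if __name__ == "__main__":
    body = build_annotate_request(b"placeholder image bytes")
    print(json.dumps([f["type"] for f in body["requests"][0]["features"]]))
```

Batching several features into one request means a single image upload serves all four analyses, rather than sending the same image once per feature.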

The platform's strength lies in the vast amount of data that users upload and in Google's constant analysis of websites, which allow the company to keep improving its recognition capabilities.

Google has demonstrated the technology in a robot that used Cloud Vision to navigate around obstacles.

During the beta period, each user will have a quota of 20 million images per month. Google stated in a blog post that during this time, “Cloud Vision API is not intended for real-time mission critical applications.”

That said, the technology could have a dramatic impact on the Internet of Things.

For example, a future system might analyse a live video feed in a department store to identify customers and monitor stock levels, without needing to interface with smartphones or sensors.

Alternatively, a Cloud Vision-equipped system could detect when the store is unusually busy (during annual sales, for example) and notify management to bring in more staff.
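One rough way such a system might decide that a store is busy is to count the faces that face detection reports in a camera frame and compare the total against a threshold. The sketch below assumes the v1 `images:annotate` response shape, where each result may carry a `faceAnnotations` list; the threshold value and the function names are hypothetical. Face counts are only a proxy for customer numbers, the API may cap how many faces it returns per image (so `maxResults` should be set generously), and Google has said the beta is not meant for real-time critical use.

```python
from typing import Any, Dict

# Hypothetical threshold: faces per frame that should count as "busy".
BUSY_THRESHOLD = 25


def count_faces(annotate_response: Dict[str, Any]) -> int:
    """Count faces across every result in an images:annotate response."""
    return sum(
        len(result.get("faceAnnotations", []))
        for result in annotate_response.get("responses", [])
    )


def should_alert(annotate_response: Dict[str, Any],
                 threshold: int = BUSY_THRESHOLD) -> bool:
    """Return True when the face count reaches the (hypothetical) threshold."""
    return count_faces(annotate_response) >= threshold


if __name__ == "__main__":
    # A trimmed response with three detected faces in one frame.
    sample = {"responses": [{"faceAnnotations": [{}, {}, {}]}]}
    print(count_faces(sample), should_alert(sample, threshold=3))
```

In practice the alert would likely be based on an average over several frames, so that one crowded snapshot does not trigger a staffing notification on its own.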